Getting Started

This quick guide shows you how to use FewBox to manage, install and uninstall your microservices on your cloud-native platform. You'll also get a few simple pointers on setting yourself up for productivity.

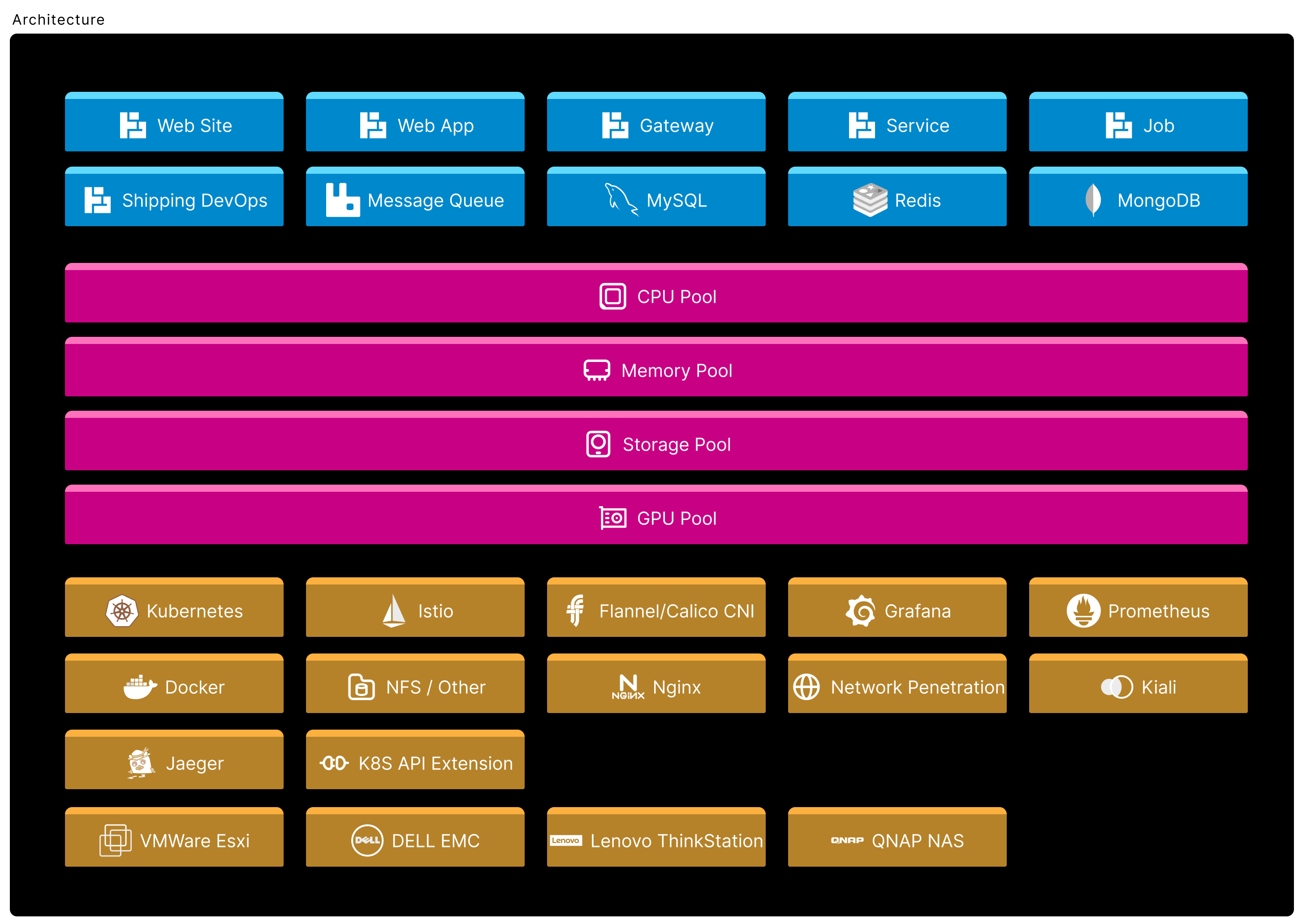

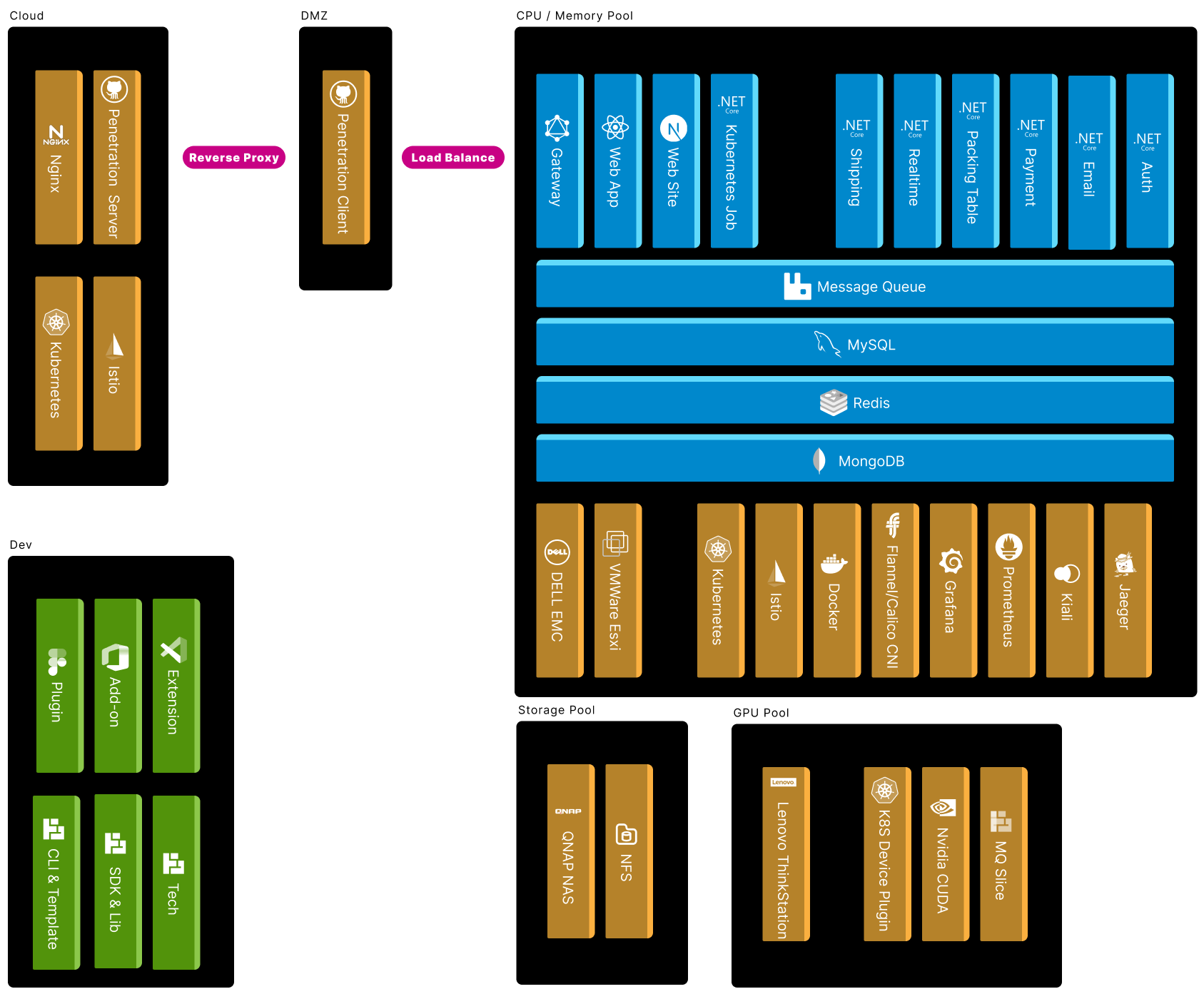

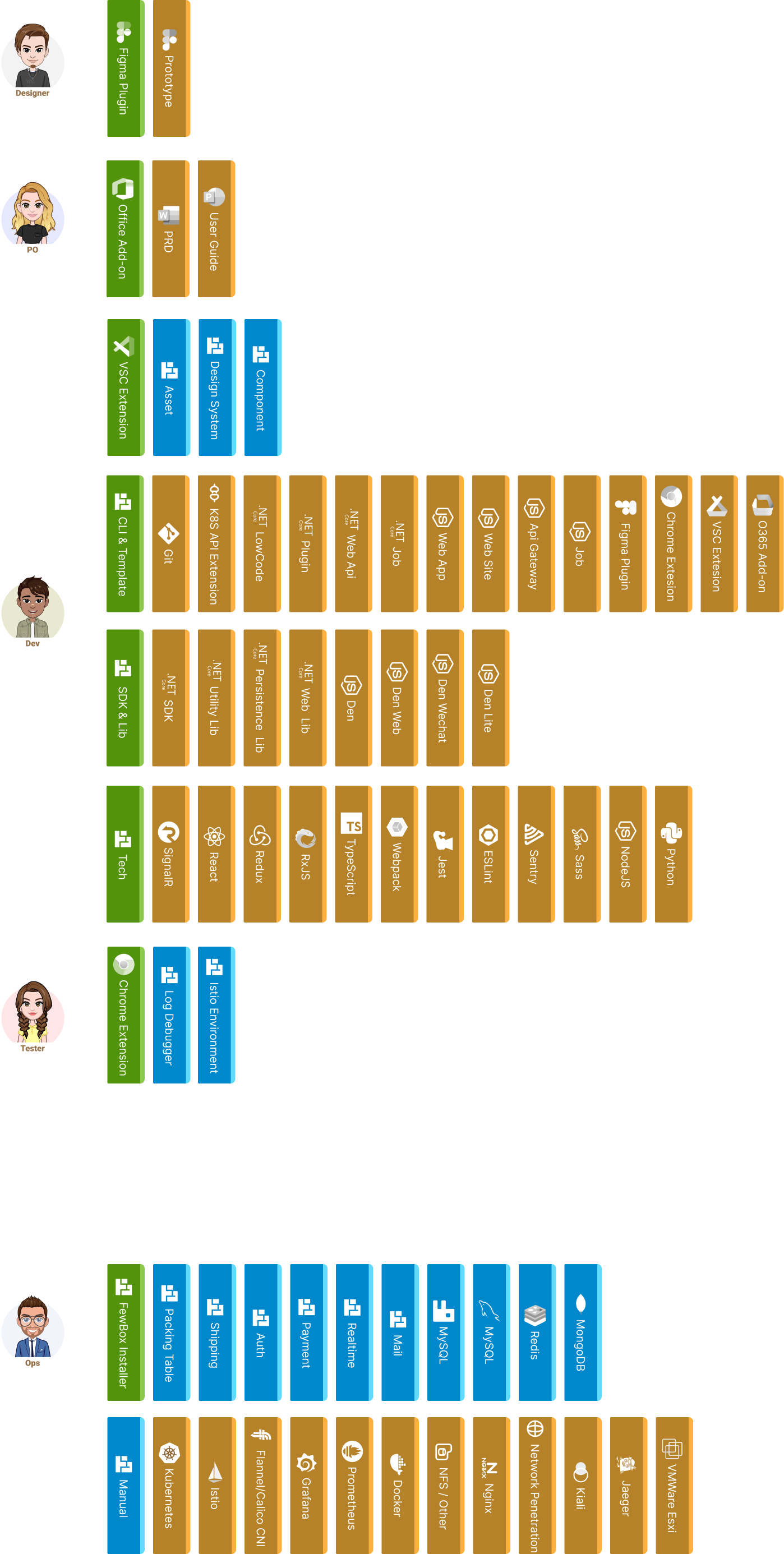

Overview

Prerequisites


Install Docker

```shell
### Remove old version ###
yum remove docker-client \
           docker-client-latest \
           docker-common \
           docker-latest \
           docker-latest-logrotate \
           docker-logrotate \
           docker-selinux \
           docker-engine-selinux \
           docker-engine
```

```shell
### Install ###
yum install yum-utils
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install docker-ce
systemctl enable docker && systemctl start docker
```

```shell
### Setting ###
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "registry-mirrors": [
    "https://hub-mirror.c.163.com",
    "https://mirror.baidubce.com"
  ],
  "insecure-registries": ["192.168.1.38:5000"]
}
EOF
# Restart Docker so it picks up daemon.json
systemctl daemon-reload && systemctl restart docker
# Check: should print "Cgroup Driver: systemd"
docker info | grep Cgroup
```
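kubeadm expects the kubelet and the container runtime to use the same cgroup driver, which is why `daemon.json` sets `native.cgroupdriver=systemd`. Instead of reading the `docker info | grep Cgroup` output by eye, a minimal sketch can fail loudly when the driver is wrong. It assumes the `Cgroup Driver: <name>` line format that `docker info` prints. `cgroup_driver` and `check_cgroup_driver` are hypothetical helper names and are not part of FewBox.

```shell
# Sketch: read `docker info` output on stdin and print the cgroup driver.
# Assumes Docker's "Cgroup Driver: <name>" line format.
cgroup_driver() {
  awk -F': ' '/Cgroup Driver/ {print $2; exit}'
}

# Returns non-zero unless the driver is "systemd" (the value set in daemon.json).
check_cgroup_driver() {
  local driver
  driver=$(cgroup_driver)
  if [ "$driver" != "systemd" ]; then
    echo "cgroup driver is '${driver:-unknown}', expected 'systemd'" >&2
    return 1
  fi
  echo "cgroup driver OK: $driver"
}

# Usage on a Docker host:
#   docker info 2>/dev/null | check_cgroup_driver
```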

Install Kubernetes

Kubeadm

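```shell
vi /etc/yum.repos.d/kubernetes.repo
```

```ini
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
```

```shell
### Install ###
# List the kubelet versions available in the repo
yum --showduplicates list kubelet
# Remove any existing kubelet, then install matching versions of kubelet, kubeadm and kubectl
yum remove kubelet
yum install kubelet-1.19.16-0 kubeadm-1.19.16-0 kubectl-1.19.16-0
```

Image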

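The two `sync.sh` scripts in this section pull and retag a hard-coded list of images. As an alternative sketch, not the FewBox script, a loop can derive the list from `kubeadm config images list` itself, so it keeps working when the image set changes. It assumes the Aliyun mirror stores every image flat as `<MY_REGISTRY>/<name>:<tag>`. That assumption also flattens nested names such as the `coredns/coredns` path used by newer Kubernetes releases. `mirror_name`, `sync_images` and the `DRY_RUN` switch are hypothetical.

```shell
# Sketch: mirror every image kubeadm reports instead of one hard-coded pull/tag pair per image.
# Assumption: the mirror keeps each image flat as <MY_REGISTRY>/<name>:<tag>.
MY_REGISTRY=${MY_REGISTRY:-registry.cn-hangzhou.aliyuncs.com/google_containers}

# Map "k8s.gcr.io/<path>/<name>:<tag>" to "<MY_REGISTRY>/<name>:<tag>".
mirror_name() {
  local image=$1
  echo "${MY_REGISTRY}/${image##*/}"
}

# Read image names (one per line) on stdin; pull each from the mirror and retag it.
# With DRY_RUN=1 the docker commands are only printed.
sync_images() {
  local image mirror
  while read -r image; do
    [ -z "$image" ] && continue
    mirror=$(mirror_name "$image")
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "docker pull $mirror"
      echo "docker tag $mirror $image"
    else
      docker pull "$mirror" && docker tag "$mirror" "$image"
    fi
  done
}

# Master node (all control-plane images):
#   kubeadm config images list | sync_images
# Worker node (only kube-proxy, pause and coredns, as in the worker script):
#   kubeadm config images list | grep -E 'kube-proxy|pause|coredns' | sync_images
```

Running it first with `DRY_RUN=1` prints the commands, so you can compare them against the master and worker scripts before pulling anything.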
```shell
### Sync Master Image (sync.sh) ###
echo ""
# Init Parameters
MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers
# MY_REGISTRY=mirrorgcrio
VERSION=$(kubeadm config images list | grep -m1 kube | awk -F: '{print $2}')
ETCD_VERSION=$(kubeadm config images list | grep -m1 etcd | awk -F: '{print $2}')
PAUSE_VERSION=$(kubeadm config images list | grep -m1 pause | awk -F: '{print $2}')
COREDNS_VERSION=$(kubeadm config images list | grep -m1 coredns | awk -F: '{print $2}')
echo "==================================================================="
echo "FewBox Pull Kubernetes $VERSION Images from $MY_REGISTRY ......"
echo "==================================================================="
echo ""
kubeadm config images list
# Pull Image
docker pull ${MY_REGISTRY}/kube-apiserver:$VERSION
docker pull ${MY_REGISTRY}/kube-controller-manager:$VERSION
docker pull ${MY_REGISTRY}/kube-scheduler:$VERSION
docker pull ${MY_REGISTRY}/kube-proxy:$VERSION
docker pull ${MY_REGISTRY}/etcd:$ETCD_VERSION
docker pull ${MY_REGISTRY}/pause:$PAUSE_VERSION
docker pull ${MY_REGISTRY}/coredns:$COREDNS_VERSION
# Add Tag
docker tag ${MY_REGISTRY}/kube-apiserver:$VERSION k8s.gcr.io/kube-apiserver:$VERSION
docker tag ${MY_REGISTRY}/kube-scheduler:$VERSION k8s.gcr.io/kube-scheduler:$VERSION
docker tag ${MY_REGISTRY}/kube-controller-manager:$VERSION k8s.gcr.io/kube-controller-manager:$VERSION
docker tag ${MY_REGISTRY}/kube-proxy:$VERSION k8s.gcr.io/kube-proxy:$VERSION
docker tag ${MY_REGISTRY}/etcd:$ETCD_VERSION k8s.gcr.io/etcd:$ETCD_VERSION
docker tag ${MY_REGISTRY}/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION
docker tag ${MY_REGISTRY}/coredns:$COREDNS_VERSION k8s.gcr.io/coredns:$COREDNS_VERSION
echo ""
echo "=================================================="
echo "FewBox Pull Kubernetes $VERSION Images FINISHED."
echo "=================================================="
echo ""
```

```shell
### Sync Worker Image (sync.sh) ###
echo ""
# Init Parameters
MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers
# MY_REGISTRY=mirrorgcrio
VERSION=$(kubeadm config images list | grep -m1 kube | awk -F: '{print $2}')
ETCD_VERSION=$(kubeadm config images list | grep -m1 etcd | awk -F: '{print $2}')
PAUSE_VERSION=$(kubeadm config images list | grep -m1 pause | awk -F: '{print $2}')
COREDNS_VERSION=$(kubeadm config images list | grep -m1 coredns | awk -F: '{print $2}')
echo "==================================================================="
echo "FewBox Pull Kubernetes $VERSION Images from $MY_REGISTRY ......"
echo "==================================================================="
echo ""
kubeadm config images list
# Pull Image
docker pull ${MY_REGISTRY}/kube-proxy:$VERSION
docker pull ${MY_REGISTRY}/pause:$PAUSE_VERSION
docker pull ${MY_REGISTRY}/coredns:$COREDNS_VERSION
# Add Tag
docker tag ${MY_REGISTRY}/kube-proxy:$VERSION k8s.gcr.io/kube-proxy:$VERSION
docker tag ${MY_REGISTRY}/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION
docker tag ${MY_REGISTRY}/coredns:$COREDNS_VERSION k8s.gcr.io/coredns:$COREDNS_VERSION
echo ""
echo "=================================================="
echo "FewBox Pull Kubernetes $VERSION Images FINISHED."
echo "=================================================="
echo ""
```

Cluster Network

```shell
### Setting (Network) ###
# Firewall
systemctl stop firewalld
systemctl disable firewalld
# Set SELinux in permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
# Disable swap (the kubelet refuses to start with swap on by default)
swapoff -a
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
# Bridge: load br_netfilter so the bridge sysctls below exist
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
# Check
sysctl --system
```

```shell
### Agent (Kubelet) ###
systemctl daemon-reload
systemctl enable kubelet && systemctl restart kubelet
journalctl -xefu kubelet
```

Kubernetes

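The `--discovery-token-ca-cert-hash` in the worker join command of this section is the SHA-256 digest of the cluster CA's public key (its DER-encoded SubjectPublicKeyInfo). If the printed join command is lost, you can recompute the hash on the master with the openssl pipeline from the kubeadm documentation. The sketch wraps that pipeline in a hypothetical `ca_cert_hash` function. It assumes an RSA CA key (the kubeadm default) at `/etc/kubernetes/pki/ca.crt`.

```shell
# Sketch: compute the value for --discovery-token-ca-cert-hash from a CA certificate.
# Follows the openssl pipeline from the kubeadm join docs; assumes an RSA CA key.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  local digest
  digest=$(openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')          # keep only the hex digest after the last space
  echo "sha256:${digest}"
}

# Usage on the master:
#   kubeadm join <master-ip>:6443 --token <token> --discovery-token-ca-cert-hash "$(ca_cert_hash)"
```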
```shell
### Install Master ###
# If the host has multiple network interfaces, also pass --apiserver-advertise-address=<master IP>
kubeadm init --pod-network-cidr=10.244.0.0/16 --kubernetes-version=1.19.16
```

```shell
### Install Worker ###
# On the master: print the join command
kubeadm token create --print-join-command
# On each worker: run the printed command, for example
kubeadm join 172.17.0.1:6443 --token vv4prq.ezm481v2k0oa0273 \
    --discovery-token-ca-cert-hash sha256:bd34337dcab22ce02049d1f98dc73a96729f1a3b9c9d80a3d76e175af1db4e21
```

```shell
### Network (Flannel) ###
# Download
wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml
# "net-conf.json > Network" must match the cluster's pod CIDR (10.244.0.0/16 above); check it with:
kubectl cluster-info dump | grep -m 1 cluster-cidr
vi kube-flannel.yml
kubectl apply -f kube-flannel.yml
```

Test

```shell
# start.sh
export KUBECONFIG=/etc/kubernetes/admin.conf
alias k=kubectl
# ~/.bashrc
source <(kubectl completion bash)
source <(kubectl completion bash | sed s/kubectl/k/g)
alias kcd='kubectl config set-context $(kubectl config current-context) --namespace'
export CALICO_DATASTORE_TYPE=kubernetes
export CALICO_KUBECONFIG=/etc/kubernetes/admin.conf
# export PATH=$PATH:/root/istio-1.6.2/bin/
```

```shell
# Enable tab completion
yum -y install bash-completion
source /usr/share/bash-completion/bash_completion
```

```shell
### Check Kubernetes Status ###
k get cs
# scheduler            Unhealthy   Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
# controller-manager   Unhealthy   Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
# Solution: remove the "--port=0" line from kube-controller-manager.yaml and kube-scheduler.yaml
# in /etc/kubernetes/manifests, then restart the kubelet:
systemctl restart kubelet.service
```

```shell
### Resource ###
kubectl describe node
kubectl describe node | grep -E '((Name|Roles):\s{6,})|(\s+(memory|cpu)\s+[0-9]+\w{0,2}.+%\))'
```

```shell
### Clean ###
kubectl drain <node name> --delete-local-data --force --ignore-daemonsets
kubeadm reset
# Remove the CNI configuration
rm -rf /etc/cni/net.d/
# Kill whatever still listens on the API server port
lsof -i :6443 | grep -v "PID" | awk '{print "kill -9",$2}' | sh
iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X
ipvsadm -C
kubectl delete node <node name>
```

```shell
### Enable Master Deploy ###
# Remove the master taint so pods can also be scheduled on the master node
kubectl taint nodes --all node-role.kubernetes.io/master-
```

```shell
### Metric ###
docker pull bitnami/metrics-server:0.6.1
docker tag bitnami/metrics-server:0.6.1 k8s.gcr.io/metrics-server/metrics-server:v0.6.1
# Apply the manifest of the same release, so it references the image tagged above
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml
```

```yaml
### Disable Metric Security (old version, v0.3.7) ###
# Edit with: kubectl -n kube-system edit deployment metrics-server
spec:
  containers:
  - args:
    - --cert-dir=/tmp
    - --secure-port=4443
    command:
    - /metrics-server
    - --kubelet-insecure-tls=true
    - --kubelet-preferred-address-types=InternalIP
    image: k8s.gcr.io/metrics-server/metrics-server:v0.3.7
```

```yaml
### Disable Metric Security (new version) ###
args:
- --cert-dir=/tmp
- --secure-port=4443
- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
- --kubelet-use-node-status-port
- --kubelet-insecure-tls # Add this line
```

```shell
### Check Metric ###
kubectl top node
kubectl top pod
```
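You can script the `--port=0` fix from the status check above. This sketch deletes only the exact `- --port=0` line from the two static-pod manifests and copies each file first. The copies go to a separate directory on purpose: the kubelet treats files in `/etc/kubernetes/manifests` as pod manifests, so a `.bak` file left there could be picked up. `enable_health_ports` is a hypothetical name, and GNU `sed -i` (as on CentOS) is assumed.

```shell
# Sketch: remove "- --port=0" from the scheduler and controller-manager static-pod manifests.
# $1: manifest directory (kubeadm default shown); $2: backup directory outside it.
enable_health_ports() {
  local dir=${1:-/etc/kubernetes/manifests}
  local backup=${2:-$(mktemp -d)}
  local f
  for f in "$dir/kube-scheduler.yaml" "$dir/kube-controller-manager.yaml"; do
    [ -f "$f" ] || { echo "missing $f" >&2; return 1; }
    cp "$f" "$backup/"                                  # backup kept outside the manifests dir
    sed -i '/^[[:space:]]*- --port=0[[:space:]]*$/d' "$f"  # drop only that flag line
  done
  echo "backups in $backup"
}

# Usage on the master, followed by the kubelet restart shown above:
#   enable_health_ports && systemctl restart kubelet.service
```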

Install Istio

```shell
### Install ###
curl -L https://istio.io/downloadIstio | sh -
# Enter the downloaded directory and put istioctl on the PATH
cd istio-<version>
export PATH=$PWD/bin:$PATH
istioctl install
```

```shell
### Addon ###
kubectl apply -f ./samples/addons/prometheus.yaml
kubectl apply -f ./samples/addons/grafana.yaml
kubectl apply -f ./samples/addons/jaeger.yaml
kubectl apply -f ./samples/addons/kiali.yaml
```

```shell
### Profile ###
# istioctl install --set profile=demo
istioctl profile list
istioctl profile dump default
istioctl profile diff default demo
```

```shell
### Verify ###
istioctl manifest generate > $HOME/generated-manifest.yaml
istioctl verify-install -f $HOME/generated-manifest.yaml
```

```shell
### Uninstall ###
# All (newer istioctl releases also accept: istioctl uninstall --purge)
istioctl x uninstall --purge
# Control plane only
istioctl x uninstall <your original installation options>
istioctl manifest generate <your original installation options> | kubectl delete -f -
# Namespace
kubectl delete namespace istio-system
```

Install NFS

NFS

```shell
### Install ###
yum -y install nfs-utils rpcbind
mkdir -p /data/k8s
chmod 755 /data/k8s
vi /etc/exports
# /etc/exports, either limited to the subnet:
# /data/k8s 192.168.1.0/24(rw,sync,no_root_squash)
# or open to all hosts:
# /data/k8s *(rw,sync,no_root_squash)
curl -v telnet://192.168.1.38:2049
```

```shell
### Start Service ###
systemctl enable rpcbind
systemctl enable nfs
systemctl start rpcbind
systemctl start nfs
rpcinfo -p
exportfs -r
exportfs
```

Kubernetes

```shell
# IMPORTANT: install on every worker node (and on the master if it also runs pods).
yum install nfs-utils
```

Test

```shell
### Client ###
# CentOS/RHEL
yum install -y nfs-utils
# Debian/Ubuntu
# apt-get install -y nfs-common
```

```shell
### Mount & Umount ###
# Master and worker nodes all point to the NFS server.
mkdir -p /mount/nfs
mount -t nfs 192.168.1.38:/NFS/k8s /mount/nfs
# mount -t nfs 192.168.1.38:/data/k8s /mount/nfs
# mount -t nfs 192.168.1.38:/NFS/nginx /mount/nfs
curl -v telnet://192.168.1.38:2049
umount -f -l /mount/nfs
```
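Once `curl -v telnet://192.168.1.38:2049` connects, it waits for interactive input. A non-interactive check that fits in scripts is sketched below. It uses bash's `/dev/tcp` redirection with a timeout. `port_open` is a hypothetical helper, and it only proves that the TCP port accepts connections, not that the export is mountable.

```shell
# Sketch: return 0 if host:port accepts a TCP connection within the timeout.
# Relies on bash's /dev/tcp pseudo-device and coreutils `timeout`.
port_open() {
  local host=$1 port=${2:-2049} secs=${3:-3}
  timeout "$secs" bash -c ">/dev/tcp/$host/$port" 2>/dev/null
}

# Usage from a master or worker node:
#   port_open 192.168.1.38 2049 && echo "NFS port reachable" || echo "NFS port closed"
```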

Install Nginx

```shell
### Install ###
yum install nginx
# Setting firewall
systemctl status firewalld
firewall-cmd --zone=public --add-port=80/tcp --permanent
firewall-cmd --zone=public --add-port=443/tcp --permanent
firewall-cmd --reload
```

```nginx
#user nginx;
#user root; # Permission issue.
# "user" defines which Linux system user owns and runs the nginx worker processes.
worker_processes 1; # A single worker process; generally set equal to the number of CPUs or cores.

#error_log logs/error.log;
#error_log logs/error.log notice; # Specifies the file the server writes its error log to.
#pid logs/nginx.pid; # File where nginx writes its master process ID (PID).

events {
    worker_connections 1024;
    # worker_processes and worker_connections let you calculate the max_clients value:
    # max_clients = worker_processes * worker_connections
}

http {
    ssl_certificate /etc/ssl/fewbox.crt;
    ssl_certificate_key /etc/ssl/fewbox.key;

    upstream istio {
        server 172.17.6.3:30815;
        server 172.17.6.4:30815;
        server 172.17.6.5:30815;
    }

    include mime.types; # Anything in mime.types is interpreted as if written inside the http { } block.
    default_type application/octet-stream;

    fastcgi_connect_timeout 600;
    fastcgi_send_timeout 600;
    fastcgi_read_timeout 600;
    client_max_body_size 200M;
    proxy_connect_timeout 600;
    proxy_send_timeout 600;
    proxy_read_timeout 600;
    proxy_http_version 1.1;
    send_timeout 600;

    #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
    #                '$status $body_bytes_sent "$http_referer" '
    #                '"$http_user_agent" "$http_x_forwarded_for"';
    #access_log logs/access.log main;

    sendfile on; # Essential for serving local static files quickly; a pure reverse proxy can turn it off.
    #tcp_nopush on; # The opposite of tcp_nodelay: optimizes the amount of data sent at once instead of delay.

    #keepalive_timeout 0;
    keepalive_timeout 65; # How long an idle keep-alive client connection stays open.

    # On-the-fly gzip compression.
    gzip on;
    gzip_min_length 1k;
    gzip_buffers 4 16k;
    gzip_comp_level 5;
    gzip_types text/plain application/javascript application/x-javascript text/css application/xml text/javascript application/x-httpd-php;
    gzip_http_version 1.1;
    gzip_vary on;
    gzip_proxied any;
    gzip_disable msie6;

    # HTTP: redirect everything to HTTPS.
    server {
        # You may want a separate file with its own server block for each virtual domain
        # on your server, and then include them.
        listen 80; # The TCP port nginx listens on for HTTP; "listen 80;" is equivalent to "listen *:80;".
        server_name _; # Catch-all; set real host names here for name-based virtual hosting.
        charset utf-8;
        #access_log logs/host.access.log main;

        # Use $host: with "server_name _", $server_name would redirect to the literal https://_/...
        return 302 https://$host$request_uri;

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root html;
        #    fastcgi_pass 127.0.0.1:9000;
        #    fastcgi_index index.php;
        #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
        #    include fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny all;
        #}
    }

    # HTTPS: proxy to the istio upstream (port 30815 on each node).
    server {
        listen 443 ssl;
        server_name _;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;
        location / {
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $host:$server_port;
            proxy_set_header X-NginX-Proxy true;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_pass http://istio;
        }
    }

    # Kubernetes API server.
    server {
        listen 8443 ssl;
        server_name _;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;
        location / {
            proxy_pass https://172.17.6.3:6443; # Your API server address
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }
    }

    server {
        # You may want a separate file with its own server block for each virtual domain
        # on your server, and then include them.
        listen 7443 ssl; # The TCP port nginx listens on for HTTPS connections.
        server_name _; # Catch-all; set real host names here for name-based virtual hosting.
        charset utf-8;
        #access_log logs/host.access.log main;

        location / {
            # "location" configures how nginx responds to requests for resources within the server.
            #root /usr/share/nginx/html;
            #index index.html index.htm;
            #try_files $uri /index.html;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $host:$server_port;
            proxy_set_header X-NginX-Proxy true;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_pass http://istio;
            #proxy_set_header Host $host;
            #proxy_set_header X-Forwarded-For $remote_addr;
            #proxy_pass http://istio;
        }

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root html;
        #    fastcgi_pass 127.0.0.1:9000;
        #    fastcgi_index index.php;
        #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
        #    include fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny all;
        #}
    }

    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen 8000;
    #    listen somename:8080;
    #    server_name somename alias another.alias;
    #
    #    location / {
    #        root html;
    #        index index.html index.htm;
    #    }
    #}
}
```
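The comment in the `events` block gives max_clients = worker_processes * worker_connections. The nginx documentation notes that worker_connections counts every connection, including those to proxied servers. In a reverse proxy like this one, each client therefore typically uses two connections, and the usable figure is roughly halved. Below is a small sketch of that arithmetic. `max_clients` is a hypothetical helper, and the halving is an approximation, not an nginx setting.

```shell
# Sketch: estimate max_clients from worker_processes and worker_connections.
# $1: worker_processes (defaults to the core count), $2: worker_connections,
# $3: "proxy" to halve the result (client + upstream connection per request).
max_clients() {
  local workers=${1:-$(nproc)} connections=${2:-1024} mode=${3:-static}
  local total=$(( workers * connections ))
  if [ "$mode" = "proxy" ]; then
    total=$(( total / 2 ))
  fi
  echo "$total"
}

# This config: 1 worker, 1024 connections, proxying to Istio.
#   max_clients 1 1024 proxy   # prints 512
```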

Install SSL

Let's Encrypt (https://letsencrypt.org/)

Certbot (https://certbot.eff.org/)

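```shell
# Generate an SSL private key protected by a passphrase (fewbox-pw.key)
openssl genrsa -des3 -out fewbox-pw.key 2048
# View the private key details (prompts for the passphrase)
openssl rsa -text -in fewbox-pw.key
# View the encrypted key file as stored on disk
cat fewbox-pw.key
# Generate a CSR, Certificate Signing Request (fewbox.csr)
openssl req -new -key fewbox-pw.key -out fewbox.csr
# View the CSR
openssl req -text -in fewbox.csr -noout
# Generate a self-signed CRT certificate valid for 365 days (fewbox.crt)
openssl x509 -req -days 365 -in fewbox.csr -signkey fewbox-pw.key -out fewbox.crt
# Write a copy of the private key without the passphrase (fewbox.key), so nginx can load it without prompting
openssl rsa -in fewbox-pw.key -out fewbox.key
# The nginx config expects /etc/ssl/fewbox.crt and /etc/ssl/fewbox.key
cp fewbox.crt fewbox.key /etc/ssl/
```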
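The openssl steps above prompt for a passphrase and for certificate details. Below is a non-interactive sketch of the same sequence, plus a check that the resulting certificate and key belong together (their RSA moduli match). The helper names, the `changeit` default passphrase and the `/CN=fewbox.com` subject are placeholders, not values from FewBox.

```shell
# Sketch: same steps as above, with -passout/-passin/-subj replacing the prompts.
# $1: output dir, $2: base name, $3: passphrase (placeholder), $4: subject (placeholder).
make_self_signed() {
  local dir=$1 name=${2:-fewbox} pass=${3:-changeit} subj=${4:-/CN=fewbox.com}
  openssl genrsa -des3 -passout "pass:$pass" -out "$dir/$name-pw.key" 2048 2>/dev/null
  openssl req -new -key "$dir/$name-pw.key" -passin "pass:$pass" -subj "$subj" \
    -out "$dir/$name.csr" 2>/dev/null
  openssl x509 -req -days 365 -in "$dir/$name.csr" -signkey "$dir/$name-pw.key" \
    -passin "pass:$pass" -out "$dir/$name.crt" 2>/dev/null
  # nginx needs the key without a passphrase
  openssl rsa -in "$dir/$name-pw.key" -passin "pass:$pass" -out "$dir/$name.key" 2>/dev/null
}

# A certificate and an RSA key belong together when their moduli are identical.
cert_matches_key() {
  local crt=$1 key=$2
  [ "$(openssl x509 -noout -modulus -in "$crt")" = "$(openssl rsa -noout -modulus -in "$key")" ]
}
```

For example, `make_self_signed /etc/ssl` would produce `/etc/ssl/fewbox.crt` and `/etc/ssl/fewbox.key`, the paths the nginx `ssl_certificate` directives point to.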

Setting Firewalld

```shell
# View status
systemctl status firewalld
# Reload rules
firewall-cmd --reload
# Start on boot
systemctl enable firewalld
# Disable start on boot
systemctl disable firewalld
# Start
systemctl start firewalld
# Stop
systemctl stop firewalld
# View zones
firewall-cmd --get-active-zones
firewall-cmd --get-zone-of-interface=enp0s3
# Check whether panic mode (drop all packets) is on
firewall-cmd --query-panic
# Drop all packets
firewall-cmd --panic-on
# Allow packets again
firewall-cmd --panic-off
# View open ports
firewall-cmd --zone=public --list-ports
# Open a port
firewall-cmd --zone=public --add-port=80/tcp --permanent
firewall-cmd --reload
# Others
# firewall-cmd --zone=public --add-interface=eth0   (to make it permanent, add '--permanent' and then reload the firewall)
# firewall-cmd --set-default-zone=public            (takes effect immediately, no reload needed)
# firewall-cmd --reload or firewall-cmd --complete-reload
#   (--reload keeps existing connections; --complete-reload drops them, much like a restart)
```
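The nginx config earlier listens on 80, 443, 7443 and 8443, while the commands above open one port at a time. The sketch below opens several ports idempotently: it queries the permanent configuration first and reloads only when something changed. `open_ports` is hypothetical, and `FIREWALL_CMD` exists only so that the logic can be exercised with a stub.

```shell
# Sketch: open the given TCP ports in the public zone, skipping ports already open.
FIREWALL_CMD=${FIREWALL_CMD:-firewall-cmd}

open_ports() {
  local port changed=0
  for port in "$@"; do
    if ! $FIREWALL_CMD --permanent --zone=public --query-port="$port/tcp" >/dev/null 2>&1; then
      $FIREWALL_CMD --zone=public --add-port="$port/tcp" --permanent >/dev/null
      changed=1
    fi
  done
  # --permanent rules only take effect after a reload
  if [ "$changed" = 1 ]; then
    $FIREWALL_CMD --reload >/dev/null
  fi
  return 0
}

# Usage:
#   open_ports 80 443 7443 8443
```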

Install FRP (Optional)

Download

```shell
# https://github.com/fatedier/frp/releases
# amd64
wget https://github.com/fatedier/frp/releases/download/v0.44.0/frp_0.44.0_linux_amd64.tar.gz
```

Server (with public network IP)

```ini
# frps.ini (examples: https://gofrp.org/docs/examples/)
[common]
bind_port = 7000
vhost_http_port = 8080
vhost_https_port = 8081
log_file = console
dashboard_addr = 0.0.0.0
dashboard_port = 7500
dashboard_user = fewbox
dashboard_pwd = {Your Pwd}
token = {Your Token}
max_pool_count = 50
```

```shell
vi /etc/systemd/system/frps.service
```

```ini
[Unit]
Description = frp server
After = network.target syslog.target
Wants = network.target

[Service]
Type = simple
ExecStart = /path/to/frps -c /path/to/frps.ini

[Install]
WantedBy = multi-user.target
```

```shell
# Reload systemd after creating or editing the unit file
systemctl daemon-reload
systemctl start frps
systemctl stop frps
systemctl restart frps
systemctl status frps
systemctl enable frps
```

Client (Internal Cluster Egress)

```shell
# Pull image
docker pull fatedier/frpc
```

```ini
[common]
server_addr = {Server IP}
server_port = 7000
token = {Your Token}

[web]
type = http
local_ip = {Local IP, e.g. nginx}
local_port = 5000
custom_domains = *.fewbox.com
```

NAS

Command: -c /frpc/frpc.ini

Entrypoint: /usr/bin/frpc

Shared folder: /DevOps/frpc mounted at /frpc
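On hosts without a NAS UI, the three NAS settings above (the `-c /frpc/frpc.ini` command, the `/usr/bin/frpc` binary and the `/DevOps/frpc` shared folder) map onto a plain `docker run`. The container name `frpc`, the `unless-stopped` restart policy and the `run_frpc`/`DRY_RUN` names are assumptions for illustration only.

```shell
# Sketch: the NAS container settings expressed as a docker run command.
# $1: host folder holding frpc.ini; DRY_RUN=1 prints the command instead of running it.
run_frpc() {
  local conf_dir=${1:-/DevOps/frpc}
  local cmd=(docker run -d --name frpc --restart unless-stopped
    -v "$conf_dir:/frpc"
    --entrypoint /usr/bin/frpc
    fatedier/frpc -c /frpc/frpc.ini)
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Usage:
#   DRY_RUN=1 run_frpc   # inspect the command first
#   run_frpc             # then start the container
```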


Install ESXi (Optional)

https://www.dell.com/support/home/en-us/drivers/driversdetails?driverid=gn51y&lwp=rt


Install Packing Table

Domain, NFS, Namespace, Service Account, Cluster Role Binding, Packing Table

```shell
# Install
. ./fewbox-install.sh
```

Test

```shell
# Check
k get ns fewbox-system
k get sa fewbox
k get clusterrolebinding fewbox # cluster-admin - fewbox/fewbox-admin
k get cm packingtable
k get crd packingtables.fewbox.com
k get deploy packingtable
```


Install Infrastructure

Namespace, Service Account, Cluster Role, Cluster Role Binding, NFS Provisioner, NFS Storage Class

```shell
k create -f ./component/infrastructure/fewbox-infrastructure.yaml
```

Test

```shell
kcd fewbox
k get ns fewbox
k get sa nfs-client-provisioner
k get clusterrole nfs-client-provisioner-runner
k get clusterrolebinding run-nfs-client-provisioner-fewbox
k get deploy nfs-client-provisioner
k get sc managed-nfs-storage
```


Install ThirdParty

RabbitMQ: CRD, ClusterRole, Service Account, Role Binding, Cluster Role Binding, Deployment, Virtual Service, RMQ

MySQL: PVC, Deployment, Service, Destination Rule, Virtual Service

Redis: PVC, Deployment, Service, Destination Rule, Virtual Service

MongoDB: PVC, Deployment, Service, Destination Rule, Virtual Service

```shell
k create -f ./component/thirdparty/fewbox-thirdparty.yaml
```

Test

```shell
k get pvc mysql
k get pvc npmregistry
k get pvc redis
k get pv # Created from the PVCs
#k get cm npmregistry
k get cm rabbitmq-cluster-operator-leader-election #??
k get deploy mysql
k get deploy nfs-client-provisioner
#k get deploy npmregistry
k get deploy rabbitmq-cluster-operator
k get deploy redis
k get svc mysql
k get svc npmregistry
k get svc redis
k get dr mysql
#k get dr npmregistry
k get dr redis
k get vs mysql
#k get vs npmregistry
k get vs redis
k get vs rabbitmq-client
k get crd rabbitmqclusters.rabbitmq.com
k get clusterrole rabbitmq-cluster-operator-role
k get sa rabbitmq-cluster-operator
k get rolebinding rabbitmq-cluster-leader-election-rolebinding # rabbitmq-cluster-leader-election-role - rabbitmq-cluster-operator
k get clusterrolebinding rabbitmq-cluster-operator-rolebinding-fewbox
k get rmq fewbox
```

```shell
# Test MySQL
k exec -it fewbox-mysql-0 -- bash
# Inside the pod, open the SQL shell:
#   mysqlsh fewbox@localhost --sql
# SQL > set global read_only=0;
k port-forward service/fewbox-mysql-instances mysql
```
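Each `k get` above prints either the resource or an error, so you have to read a long list by eye. The sketch below reads `kind name` pairs and reports only what is missing. `check_resources`, the `KUBECTL` override and the `fewbox` default namespace (matching `kcd fewbox` earlier) are assumptions.

```shell
# Sketch: check "kind name" pairs from stdin; print only the missing ones.
# Exit status is non-zero if anything is missing. Lines starting with # are skipped.
KUBECTL=${KUBECTL:-kubectl}

check_resources() {
  local ns=${NAMESPACE:-fewbox} kind name missing=0
  while read -r kind name; do
    [ -z "$kind" ] && continue
    case "$kind" in \#*) continue ;; esac
    # </dev/null keeps kubectl from consuming the list on stdin
    if ! $KUBECTL -n "$ns" get "$kind" "$name" >/dev/null 2>&1 </dev/null; then
      echo "MISSING: $kind/$name"
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]
}

# Usage (a subset of the ThirdParty checks above):
#   check_resources <<'EOF'
#   pvc mysql
#   pvc redis
#   deploy rabbitmq-cluster-operator
#   vs rabbitmq-client
#   rmq fewbox
#   EOF
```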


Install Foundation

```shell
# DB Schema
k create -f ./component/foundation/fewbox-foundation-schema.yaml
# App
k create -f ./component/foundation/fewbox-foundation.yaml
```

Test

```shell
k get svc -n fewbox # auth, mail, payment, realtime, shipping, shipping-app, shipping-gateway
```

About Us

FewBox was founded by an older guy who has worked as an architect at both large companies and startups (Lenovo, JD, BoostSolutions) and is now building a small and beautiful company.

We’re building the smart kit for customers who want to be smart.

Getting Start

This quick guide will show you how to use FewBox to manage, install & un-install your microservice on your cloud-native platform. You'll also get a few simple pointers on setting yourself up for productivity.

Overview

Prerequisites

-

Install Docker

shell### Remove old version ### yum remove docker-client \ docker-client-latest \ docker-common \ docker-latest \ docker-latest-logrotate \ docker-logrotate \ docker-selinux \ docker-engine-selinux \ docker-engineshell### Install ### yum install yum-utils yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo yum install docker-ce systemctl enable docker && systemctl start dockershell### Setting ### cat > /etc/docker/daemon.json <<EOF { "exec-opts": ["native.cgroupdriver=systemd"], "log-driver": "json-file", "log-opts": { "max-size": "100m" }, "storage-driver": "overlay2", "registry-mirrors": [ "https://hub-mirror.c.163.com", "https://mirror.baidubce.com" ], "insecure-registries": ["192.168.1.38:5000"] } EOF # Check docker info | grep Cgroup -

Install Kubernetes

Kubeadm

shellvi /etc/yum.repos.d/kubernetes.repoini[kubernetes] name=Kubernetes baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64 enabled=1 gpgcheck=0shell### Install ### # kubelet # yum --showduplicates list kubelet yum remove kubelet yum install kubelet-1.19.16-0 yum install kubeadm-1.19.16Image

shell### Sync Master Image (sync.sh) ### echo "" # Init Parameters MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers # MY_REGISTRY=mirrorgcrio VERSION=$(kubeadm config images list | grep -m1 kube | awk -F: '{print $2}') ETCD_VERSION=$(kubeadm config images list | grep -m1 etcd | awk -F: '{print $2}') PAUSE_VERSION=$(kubeadm config images list | grep -m1 pause | awk -F: '{print $2}') COREDNS_VERSION=$(kubeadm config images list | grep -m1 coredns | awk -F: '{print $2}') echo "===================================================================" echo "FewBox Pull Kubernetes "$VERSION" Images from "$MY_REGISTRY" ......" echo "===================================================================" echo "" kubeadm config images list # Pull Image docker pull ${MY_REGISTRY}/kube-apiserver:$VERSION docker pull ${MY_REGISTRY}/kube-controller-manager:$VERSION docker pull ${MY_REGISTRY}/kube-scheduler:$VERSION docker pull ${MY_REGISTRY}/kube-proxy:$VERSION docker pull ${MY_REGISTRY}/etcd:$ETCD_VERSION docker pull ${MY_REGISTRY}/pause:$PAUSE_VERSION docker pull ${MY_REGISTRY}/coredns:$COREDNS_VERSION # Add Tag docker tag ${MY_REGISTRY}/kube-apiserver:$VERSION k8s.gcr.io/kube-apiserver:$VERSION docker tag ${MY_REGISTRY}/kube-scheduler:$VERSION k8s.gcr.io/kube-scheduler:$VERSION docker tag ${MY_REGISTRY}/kube-controller-manager:$VERSION k8s.gcr.io/kube-controller-manager:$VERSION docker tag ${MY_REGISTRY}/kube-proxy:$VERSION k8s.gcr.io/kube-proxy:$VERSION docker tag ${MY_REGISTRY}/etcd:$ETCD_VERSION k8s.gcr.io/etcd:$ETCD_VERSION docker tag ${MY_REGISTRY}/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION docker tag ${MY_REGISTRY}/coredns:$COREDNS_VERSION k8s.gcr.io/coredns:$COREDNS_VERSION echo "" echo "==================================================" echo "FewBox Pull Kubernetes "$VERSION" Images FINISHED." 
echo "==================================================" echo ""shell### Sync Worker Image (sync.sh) ### echo "" # Init Parameters MY_REGISTRY=registry.cn-hangzhou.aliyuncs.com/google_containers # MY_REGISTRY=mirrorgcrio VERSION=$(kubeadm config images list | grep -m1 kube | awk -F: '{print $2}') ETCD_VERSION=$(kubeadm config images list | grep -m1 etcd | awk -F: '{print $2}') PAUSE_VERSION=$(kubeadm config images list | grep -m1 pause | awk -F: '{print $2}') COREDNS_VERSION=$(kubeadm config images list | grep -m1 coredns | awk -F: '{print $2}') echo "===================================================================" echo "FewBox Pull Kubernetes "$VERSION" Images from "$MY_REGISTRY" ......" echo "===================================================================" echo "" kubeadm config images list # Pull Image docker pull ${MY_REGISTRY}/kube-proxy:$VERSION docker pull ${MY_REGISTRY}/pause:$PAUSE_VERSION docker pull ${MY_REGISTRY}/coredns:$COREDNS_VERSION # Add Tag docker tag ${MY_REGISTRY}/kube-proxy:$VERSION k8s.gcr.io/kube-proxy:$VERSION docker tag ${MY_REGISTRY}/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION docker tag ${MY_REGISTRY}/coredns:$COREDNS_VERSION k8s.gcr.io/coredns:$COREDNS_VERSION echo "" echo "==================================================" echo "FewBox Pull Kubernetes "$VERSION" Images FINISHED." echo "==================================================" echo ""Cluster Network

shell### Setting (Network) ### # Firewall systemctl stop firewalld systemctl disable firewalld # Set SELinux in permissive mode (effectively disabling it) sudo setenforce 0 sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config swapoff -a sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab # Bridge cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-ip6tables = 1 net.bridge.bridge-nf-call-iptables = 1 EOF # Check sysctl --systemshell### Agent (Kubelet) ### systemctl daemon-reload systemctl enable kubelet && systemctl restart kubelet journalctl -xefu kubeletKubernetes

shell### Install Master ### # Multiple Eth need --apiserver-advertise-address kubeadm init --pod-network-cidr=10.244.0.0/16 --kubernetes-version=1.19.16shell### Install Worker ### # Print join script kubeadm token create --print-join-command # Join master kubeadm join 172.17.0.1:6443 --token vv4prq.ezm481v2k0oa0273 --discovery-token-ca-cert-hash sha256:bd34337dcab22ce02049d1f98dc73a96729f1a3b9c9d80a3d76e175af1db4e21shell### Network (Flannel) ### # Download wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml # Change "net-conf.json > Network" to kubernetes podCIDR 10.244.0.0/16 kubectl cluster-info dump | grep -m 1 cluster-cidr vi kube-flannel.yml # kubectl apply -f kube-flannel.ymlTest

shell# start.sh export KUBECONFIG=/etc/kubernetes/admin.conf alias k=kubectl #~/.bashrc source <(kubectl completion bash) source <(kubectl completion bash | sed s/kubectl/k/g) alias kcd='kubectl config set-context $(kubectl config current-context) --namespace' export CALICO_DATASTORE_TYPE=kubernetes export CALICO_KUBECONFIG=/etc/kubernetes/admin.conf # export PATH=$PATH:/root/istio-1.6.2/bin/shell# Enable tab help yum -y install bash-completion bash /usr/share/bash-completion/bash_completion bashshell### Check Kubernetes Status ### k get cs # scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused # controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused # Solution: need to remove "--port=0" in kube-controller-manager.yaml kube-scheduler.yaml /etc/kubernetes/manifests systemctl restart kubelet.serviceshell### Resource ### kubectl describe node kubectl describe node |grep -E '((Name|Roles):\s{6,})|(\s+(memory|cpu)\s+[0-9]+\w{0,2}.+%\))'shell### Clean ### kubectl drain <node name> --delete-local-data --force --ignore-daemonsets kubeadm reset cd /etc/cni/net.d/ # remove /etc/cni/net.d/ lsof -i :6443|grep -v "PID"|awk '{print "kill -9",$2}'|sh iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X ipvsadm -C kubectl delete node <node name>shell### Enable Master Deploy ### kubectl taint nodes --all node-role.kubernetes.io/master-shell### Metric ### docker pull bitnami/metrics-server:0.6.1 docker tag bitnami/metrics-server:0.6.1 k8s.gcr.io/metrics-server/metrics-server:v0.6.1 kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yamlyaml### Disable Metric Security ### spec: containers: - args: - --cert-dir=/tmp - --secure-port=4443 command: - /metrics-server - --kubelet-insecure-tls=true - --kubelet-preferred-address-types=InternalIP image: 
k8s.gcr.io/metrics-server/metrics-server:v0.3.7yaml### Disable Metric Security ### args: - --cert-dir=/tmp - --secure-port=4443 - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname - --kubelet-use-node-status-port - --kubelet-insecure-tls # Add this line (New Version).shell### Check Metric ### kubectl top node kubectl top pod -

Install Istio

shell### Install ### curl -L https://istio.io/downloadIstio | sh - istioctl installshell### Addon ### kubectl apply -f ./samples/addons/prometheus.yaml kubectl apply -f ./samples/addons/grafana.yaml kubectl apply -f ./samples/addons/jaeger.yaml kubectl apply -f ./samples/addons/kiali.yamlshell### Profile ### # istioctl install --set profile=demo istioctl profile list istioctl profile dump default istioctl profile diff default demoshell### Verify ### istioctl manifest generate > $HOME/generated-manifest.yaml istioctl verify-install -f $HOME/generated-manifest.yamlshell### Uninstall ### # All istioctl x uninstall --purge # Control Panel istioctl x uninstall <your original installation options> istioctl manifest generate <your original installation options> | kubectl delete -f - # Namespace kubectl delete namespace istio-system # -

Install NFS

NFS

shell### Install ### yum -y install nfs-utils rpcbind mkdir -p /data/k8s chmod 755 /data/k8s vi /etc/exports # /etc/exports # /data/k8s 192.168.1.0/24(rw,sync,no_root_squash) // /data/k8s *(rw,sync,no_root_squash) curl -v telnet://192.168.1.38:2049shell### Start Service ### systemctl enable rpcbind systemctl enable nfs systemctl start rpcbind systemctl start nfs rpcinfo -p exportfs -r exportfsKubernetes

shell# IMPORTANT!!! Need to install in the master(optional) and worker node. yum install nfs-utilsTest

shell### Client ### yum install nfs-common nfs-utils -yshell### Mount & Umount ### mount -t nfs 192.168.1.38:/NFS/k8s /mount/nfs # Master and Worker Nodes, All point to NFS Server. # mount -t nfs 192.168.1.38:/data/k8s /mount/nfs # Master and Worker Nodes, All point to NFS Server. # mount -t nfs 192.168.1.38:/NFS/nginx /mount/nfs curl -v telnet://192.168.1.38:2049 umount -f -l /mount/data/k8s -

Install Nginx

```shell
### Install ###
# On CentOS 7, nginx is provided by the EPEL repository
yum install -y epel-release
yum install -y nginx
# Setting firewall
systemctl status firewalld
firewall-cmd --zone=public --add-port=80/tcp --permanent
# The server blocks below also listen on 443, 8443 and 7443
firewall-cmd --zone=public --add-port=443/tcp --permanent
firewall-cmd --zone=public --add-port=8443/tcp --permanent
firewall-cmd --zone=public --add-port=7443/tcp --permanent
firewall-cmd --reload
```

Then replace the nginx configuration (for the yum package, `/etc/nginx/nginx.conf`) with:

```ini
#user nginx;
#user root; # Permission issue.
# Defines which Linux system user owns and runs the Nginx worker processes.

worker_processes 1;
# One worker process. Generally set equal to the number of CPUs or cores.

#error_log logs/error.log;
#error_log logs/error.log notice;
# Specifies the file the server writes its error log to.

#pid logs/nginx.pid;
# File where nginx writes its master process ID (PID).

events {
    worker_connections 1024;
    # worker_processes and worker_connections let you calculate the max_clients value:
    # max_clients = worker_processes * worker_connections
}

http {
    # Certificate and key from the "Install SSL" step
    ssl_certificate /etc/ssl/fewbox.crt;
    ssl_certificate_key /etc/ssl/fewbox.key;

    upstream istio {
        server 172.17.6.3:30815;
        server 172.17.6.4:30815;
        server 172.17.6.5:30815;
    }

    include mime.types;
    # Anything written in mime.types is interpreted as if written inside the http { } block.
    default_type application/octet-stream;

    fastcgi_connect_timeout 600;
    fastcgi_send_timeout 600;
    fastcgi_read_timeout 600;
    client_max_body_size 200M;
    proxy_connect_timeout 600;
    proxy_send_timeout 600;
    proxy_read_timeout 600;
    proxy_http_version 1.1;
    send_timeout 600;

    #log_format main '$remote_addr - $remote_user [$time_local] "$request" '
    #                '$status $body_bytes_sent "$http_referer" '
    #                '"$http_user_agent" "$http_x_forwarded_for"';
    #access_log logs/access.log main;

    sendfile on;
    # If serving locally stored static files, sendfile is essential to speed up the server,
    # but when nginx is only used as a reverse proxy you can turn it off.

    #tcp_nopush on;
    # Works opposite to tcp_nodelay: instead of optimizing delays, it optimizes the amount of data sent at once.

    #keepalive_timeout 0;
    keepalive_timeout 65;
    # Timeout during which a keep-alive client connection stays open.

    gzip on;
    # Tells the server to use on-the-fly gzip compression.
    gzip_min_length 1k;
    gzip_buffers 4 16k;
    gzip_comp_level 5;
    gzip_types text/plain application/javascript application/x-javascript text/css application/xml text/javascript application/x-httpd-php;
    gzip_http_version 1.1;
    gzip_vary on;
    gzip_proxied any;
    gzip_disable msie6;

    server {
        # You would want to make a separate file with its own server block for each virtual domain
        # on your server and then include them.
        listen 80;
        # Tells Nginx the hostname and TCP port where it should listen for HTTP connections.
        # listen 80; is equivalent to listen *:80;
        server_name _;
        # Lets you do name-based virtual hosting.
        charset utf-8;
        #access_log logs/host.access.log main;

        # Redirect HTTP to HTTPS. Use $host: $server_name is the literal "_" here.
        return 302 https://$host$request_uri;

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root html;
        #    fastcgi_pass 127.0.0.1:9000;
        #    fastcgi_index index.php;
        #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
        #    include fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny all;
        #}
    }

    server {
        listen 443 ssl;
        server_name _;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;
        location / {
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $host:$server_port;
            proxy_set_header X-NginX-Proxy true;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_pass http://istio;
        }
    }

    server {
        listen 8443 ssl;
        server_name _;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;
        location / {
            proxy_pass https://172.17.6.3:6443; # Your API server address
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
        }
    }

    server {
        # You would want to make a separate file with its own server block for each virtual domain
        # on your server and then include them.
        listen 7443 ssl;
        # Tells Nginx the hostname and TCP port where it should listen for HTTP connections.
        # listen 80; is equivalent to listen *:80;
        server_name _;
        # Lets you do name-based virtual hosting.
        charset utf-8;
        #access_log logs/host.access.log main;

        location / {
            # The location setting lets you configure how nginx responds to requests for resources within the server.
            #root /usr/share/nginx/html;
            #index index.html index.htm;
            #try_files $uri /index.html;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header Host $host:$server_port;
            proxy_set_header X-NginX-Proxy true;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_pass http://istio;
            #proxy_set_header Host $host;
            #proxy_set_header X-Forwarded-For $remote_addr;
            #proxy_pass http://istio;
        }

        #error_page 404 /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root html;
        #    fastcgi_pass 127.0.0.1:9000;
        #    fastcgi_index index.php;
        #    fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;
        #    include fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny all;
        #}
    }

    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen 8000;
    #    listen somename:8080;
    #    server_name somename alias another.alias;
    #    location / {
    #        root html;
    #        index index.html index.htm;
    #    }
    #}
}
```

Validate the file with `nginx -t`, then run `systemctl enable nginx && systemctl start nginx`.
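The `upstream istio` block lists every node with the same port, so it has to be edited whenever a node is added or removed. A small sketch that renders it from a list of IPs; `render_upstream` is a hypothetical helper and assumes all nodes expose the same port (30815 above).

```shell
#!/usr/bin/env bash
# Hypothetical helper: render an nginx "upstream" block from node IPs that share one port.
# Usage: render_upstream NAME PORT IP [IP...]
render_upstream() {
  local name="$1" port="$2" ip
  shift 2
  printf 'upstream %s {\n' "$name"
  # One "server IP:PORT;" line per node
  for ip in "$@"; do
    printf '    server %s:%s;\n' "$ip" "$port"
  done
  printf '}\n'
}

render_upstream istio 30815 172.17.6.3 172.17.6.4 172.17.6.5
```

Paste the output into `nginx.conf`, then check and apply it with `nginx -t && nginx -s reload`.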

Install SSL

Let's Encrypt (https://letsencrypt.org/)

Certbot (https://certbot.eff.org/)

```shell
# Generate an SSL private key protected by a passphrase (fewbox-pw.key)
openssl genrsa -des3 -out fewbox-pw.key 2048
# View the private key details (prompts for the passphrase)
openssl rsa -text -in fewbox-pw.key
# View the raw (encrypted) private key file
cat fewbox-pw.key
# Generate a CSR (fewbox.csr, Certificate Signing Request)
openssl req -new -key fewbox-pw.key -out fewbox.csr
# View the CSR
openssl req -text -in fewbox.csr -noout
# Generate a self-signed CRT (fewbox.crt) certificate, valid for 365 days
openssl x509 -req -days 365 -in fewbox.csr -signkey fewbox-pw.key -out fewbox.crt
# Remove the passphrase to get the private key nginx loads (fewbox.key)
openssl rsa -in fewbox-pw.key -out fewbox.key
# Copy both files to the paths referenced in nginx.conf
cp fewbox.crt fewbox.key /etc/ssl/
```
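The OpenSSL commands above can be condensed into one non-interactive call when a self-signed certificate is acceptable (browsers will show a warning; Let's Encrypt is the option for a trusted one). A sketch, assuming OpenSSL 1.0 or later on `PATH`; `make_self_signed` and `cert_matches_key` are hypothetical names. It writes `fewbox.key` and `fewbox.crt`, the filenames `nginx.conf` expects, and the second function compares public keys to confirm the pair belongs together.

```shell
#!/usr/bin/env bash
# Sketch: one-step self-signed certificate plus a key/certificate consistency check.
# Assumes OpenSSL 1.0+ on PATH. Function names are hypothetical.

# Usage: make_self_signed OUT_DIR COMMON_NAME [DAYS]
make_self_signed() {
  local out_dir="$1" cn="$2" days="${3:-365}"
  mkdir -p "$out_dir" || return 1
  # -x509: emit a self-signed certificate directly instead of a CSR
  # -nodes: leave the private key unencrypted so nginx can load it without a passphrase
  # -subj: answer the distinguished-name prompts non-interactively
  openssl req -x509 -newkey rsa:2048 -nodes -sha256 -days "$days" \
    -subj "/CN=${cn}" \
    -keyout "${out_dir}/fewbox.key" -out "${out_dir}/fewbox.crt" 2>/dev/null || return 1
  # Only the owner should be able to read the private key
  chmod 600 "${out_dir}/fewbox.key"
}

# Usage: cert_matches_key CERT KEY  (prints "match" or "mismatch")
cert_matches_key() {
  local crt="$1" key="$2" cert_pub key_pub
  # Both commands print the public key in the same PEM format
  cert_pub="$(openssl x509 -noout -pubkey -in "$crt")"
  key_pub="$(openssl pkey -pubout -in "$key")"
  if [ -n "$cert_pub" ] && [ "$cert_pub" = "$key_pub" ]; then
    echo match
  else
    echo mismatch
  fi
}
```

For example, `make_self_signed /etc/ssl fewbox.com` followed by `cert_matches_key /etc/ssl/fewbox.crt /etc/ssl/fewbox.key`. Only a Common Name is set here; current browsers also expect a subjectAltName, which needs `-addext` (OpenSSL 1.1.1+) or a config file.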

Configure Firewalld

```shell
# View status
systemctl status firewalld
# Reload rules (applies --permanent changes without a restart)
firewall-cmd --reload
# Start on boot
systemctl enable firewalld
# Do not start on boot
systemctl disable firewalld
# Start
systemctl start firewalld
# Stop
systemctl stop firewalld
# View zones
firewall-cmd --get-active-zones
firewall-cmd --get-zone-of-interface=enp0s3
# Check whether panic mode (drop all packets) is on
firewall-cmd --query-panic
# Drop all packets (panic mode; this also cuts off SSH sessions)
firewall-cmd --panic-on
# Allow packets again (leave panic mode)
firewall-cmd --panic-off
# View open ports
firewall-cmd --zone=public --list-ports
# Open a port
firewall-cmd --zone=public --add-port=80/tcp --permanent
firewall-cmd --reload
# Others
# firewall-cmd --zone=public --add-interface=eth0   (to keep it after a restart, add '--permanent' and then reload the firewall)
# firewall-cmd --set-default-zone=public   (takes effect immediately, no reload needed)
# firewall-cmd --reload or firewall-cmd --complete-reload   (the first keeps existing connections; the second drops them, like a restart)
```
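The Nginx server blocks listen on 80, 443, 8443 and 7443, and each port needs its own `--add-port` rule. A sketch that opens a list of ports and reloads once; `open_ports` and the `DRY_RUN` variable are hypothetical, and with `DRY_RUN=1` it only prints the `firewall-cmd` commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: permanently open several TCP ports in one zone, then reload once.
# Set DRY_RUN=1 to print the commands instead of running them.
# Usage: open_ports ZONE PORT [PORT...]
open_ports() {
  local zone="$1" port
  shift
  local run=()
  [ "${DRY_RUN:-0}" = 1 ] && run=(echo)
  for port in "$@"; do
    "${run[@]}" firewall-cmd --zone="$zone" --add-port="${port}/tcp" --permanent || return 1
  done
  # --permanent rules only take effect after a reload
  "${run[@]}" firewall-cmd --reload
}

# Dry run for the ports used in nginx.conf
DRY_RUN=1 open_ports public 80 443 8443 7443
```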

Install FRP (Optional)

Download

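```shell
# https://github.com/fatedier/frp/releases
# amd64
wget https://github.com/fatedier/frp/releases/download/v0.44.0/frp_0.44.0_linux_amd64.tar.gz
# Unpack (contains frps, frpc and sample frps.ini / frpc.ini)
tar -xzf frp_0.44.0_linux_amd64.tar.gz
```

Server (with public network IP)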

```ini
# https://gofrp.org/docs/examples/
# frps.ini
[common]
bind_port = 7000
vhost_http_port = 8080
vhost_https_port = 8081
log_file = console
dashboard_addr = 0.0.0.0
dashboard_port = 7500
dashboard_user = fewbox
dashboard_pwd = {Your Pwd}
# Clients must use the same token
token = {Your Token}
max_pool_count = 50
```

```shell
vi /etc/systemd/system/frps.service
```

```ini
[Unit]
Description = frp server
After = network.target syslog.target
Wants = network.target

[Service]
Type = simple
ExecStart = /path/to/frps -c /path/to/frps.ini

[Install]
WantedBy = multi-user.target
```

```shell
# Make systemd pick up the new unit file
systemctl daemon-reload
systemctl start frps
systemctl stop frps
systemctl restart frps
systemctl status frps
systemctl enable frps
```

Client (Internal Cluster Egress)

```shell
# Pull image
docker pull fatedier/frpc
```

```ini
[common]
# server_port and token must match bind_port and token in frps.ini
server_addr = {Server IP}
server_port = 7000
token = {Your Token}

[web]
type = http
local_ip = {Local IP, e.g. nginx}
local_port = 5000
custom_domains = *.fewbox.com
```

NAS

Command: -c /frpc/frpc.ini

Entrypoint: /usr/bin/frpc

Shared folder: /DevOps/frpc, mounted at /frpc
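The NAS settings above (command, entrypoint, shared folder) map onto a single `docker run`. A sketch under assumptions: the container name `frpc` and the `--restart unless-stopped` policy are our own choices, `run_frpc` is a hypothetical wrapper, and `DRY_RUN=1` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: the NAS container settings as one "docker run".
# Assumed: container name "frpc" and restart policy "unless-stopped".
# Usage: run_frpc [HOST_CONFIG_DIR]   (defaults to /DevOps/frpc)
run_frpc() {
  local cfg_dir="${1:-/DevOps/frpc}"
  local run=()
  [ "${DRY_RUN:-0}" = 1 ] && run=(echo)
  # Shared folder -> /frpc, entrypoint /usr/bin/frpc, command "-c /frpc/frpc.ini"
  "${run[@]}" docker run -d --name frpc --restart unless-stopped \
    -v "${cfg_dir}:/frpc" \
    --entrypoint /usr/bin/frpc \
    fatedier/frpc -c /frpc/frpc.ini
}

# Print the command that would be executed
DRY_RUN=1 run_frpc
```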


Install ESXi (Optional)

https://www.dell.com/support/home/en-us/drivers/driversdetails?driverid=gn51y&lwp=rt


Install Packing Table

Domain, NFS, Namespace, Service Account, Cluster Role Binding, Packing Table

```shell
# Install
. ./fewbox-install.sh
```

Test

```shell
# Check
k get ns fewbox-system
k get sa fewbox
k get clusterrolebinding fewbox # cluster-admin - fewbox/fewbox-admin
k get cm packingtable
k get crd packingtables.fewbox.com
k get deploy packingtable
```
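The checks in this guide use `k` for `kubectl` and, later, `kcd` to switch namespaces; neither ships with kubectl. One possible definition, assuming `kcd NAMESPACE` is meant to change the default namespace of the current context (our interpretation). Functions are used instead of aliases because aliases are not expanded in non-interactive scripts.

```shell
#!/usr/bin/env bash
# Assumed shorthands used throughout this guide (e.g. add to ~/.bashrc).

# k: plain shorthand for kubectl
k() { kubectl "$@"; }

# kcd: hypothetical helper that sets the default namespace of the current context
kcd() {
  if [ -z "$1" ]; then
    echo "usage: kcd NAMESPACE" >&2
    return 1
  fi
  kubectl config set-context --current --namespace="$1"
}
```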


Install Infrastructure

Namespace, Service Account, Cluster Role, Cluster Role Binding, NFS Provisioner, NFS Storage Class

```shell
k create -f ./component/infrastructure/fewbox-infrastructure.yaml
```

Test

```shell
kcd fewbox
k get ns fewbox
k get sa nfs-client-provisioner
k get clusterrole nfs-client-provisioner-runner
k get clusterrolebinding run-nfs-client-provisioner-fewbox
k get deploy nfs-client-provisioner
k get sc managed-nfs-storage
```
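Rather than reading the output of each `k get` by eye, the checks can be looped. A sketch with a hypothetical `check_resources` helper that prints `ok` or `MISSING` per `KIND/NAME` and returns non-zero if anything is absent; cluster-scoped kinds such as `clusterrole` and `sc` simply ignore `-n`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: "kubectl get" each KIND/NAME and report which are missing.
# Usage: check_resources NAMESPACE KIND/NAME [KIND/NAME...]
check_resources() {
  local ns="$1" res missing=0
  shift
  for res in "$@"; do
    if kubectl get -n "$ns" "$res" >/dev/null 2>&1; then
      echo "ok      $res"
    else
      echo "MISSING $res"
      missing=$((missing + 1))
    fi
  done
  # Non-zero status when anything is missing (useful in scripts or CI)
  [ "$missing" -eq 0 ]
}

# Example for the infrastructure step:
# check_resources fewbox ns/fewbox sa/nfs-client-provisioner \
#   clusterrole/nfs-client-provisioner-runner \
#   clusterrolebinding/run-nfs-client-provisioner-fewbox \
#   deploy/nfs-client-provisioner sc/managed-nfs-storage
```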


Install ThirdParty

RabbitMQ: CRD, Cluster Role, Service Account, Role Binding, Cluster Role Binding, Deployment, Virtual Service, RabbitmqCluster (rmq)

MySQL: PVC, Deployment, Service, Destination Rule, Virtual Service

Redis: PVC, Deployment, Service, Destination Rule, Virtual Service

MongoDB: PVC, Deployment, Service, Destination Rule, Virtual Service

```shell
k create -f ./component/thirdparty/fewbox-thirdparty.yaml
```

Test

```shell
k get pvc mysql
k get pvc npmregistry
k get pvc redis
k get pv # Volumes bound to the PVCs above
#k get cm npmregistry
k get cm rabbitmq-cluster-operator-leader-election #??
k get deploy mysql
k get deploy nfs-client-provisioner
#k get deploy npmregistry
k get deploy rabbitmq-cluster-operator
k get deploy redis
k get svc mysql
k get svc npmregistry
k get svc redis
k get dr mysql
#k get dr npmregistry
k get dr redis
k get vs mysql
#k get vs npmregistry
k get vs redis
k get vs rabbitmq-client
k get crd rabbitmqclusters.rabbitmq.com
k get clusterrole rabbitmq-cluster-operator-role
k get sa rabbitmq-cluster-operator
k get rolebinding rabbitmq-cluster-leader-election-rolebinding # rabbitmq-cluster-leader-election-role - rabbitmq-cluster-operator
k get clusterrolebinding rabbitmq-cluster-operator-rolebinding-fewbox
k get rmq fewbox
```

```shell
# Test MySQL
k exec -it fewbox-mysql-0 -- bash
# Inside the pod, open MySQL Shell in SQL mode:
# mysqlsh fewbox@localhost --sql
# then, at the "SQL >" prompt:
# set global read_only=0;
k port-forward service/fewbox-mysql-instances mysql
```
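Creating the third-party manifests returns immediately, while MySQL, Redis and the RabbitMQ operator may still be starting. A sketch that waits with `kubectl rollout status`, which exits non-zero when `--timeout` expires; `wait_rollouts` is hypothetical, and the namespace `fewbox` is an assumption based on the `kcd fewbox` step above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait for each deployment to finish rolling out.
# Usage: wait_rollouts NAMESPACE TIMEOUT DEPLOYMENT [DEPLOYMENT...]
wait_rollouts() {
  local ns="$1" timeout="$2" d failed=0
  shift 2
  for d in "$@"; do
    # Blocks until the rollout completes or the timeout expires
    if ! kubectl rollout status "deploy/$d" -n "$ns" --timeout="$timeout"; then
      echo "not ready: $d" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Example (namespace assumed):
# wait_rollouts fewbox 300s mysql redis rabbitmq-cluster-operator
```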


Install Foundation

```shell
# DB Schema
k create -f ./component/foundation/fewbox-foundation-schema.yaml
# App
k create -f ./component/foundation/fewbox-foundation.yaml
```

Test

```shell
# Expected services: auth, mail, payment, realtime, shipping, shipping-app, shipping-gateway
k get svc -n fewbox
```
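The comment lists the services the Foundation step should create. A sketch that reports any of them missing from `kubectl get svc -o name` (which prints one `service/NAME` per line); `missing_services` is a hypothetical helper, and empty output means all are present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each expected service that is absent from a namespace.
# Usage: missing_services NAMESPACE SERVICE [SERVICE...]
missing_services() {
  local ns="$1" svc present
  shift
  # "-o name" prints one "service/<name>" line per service
  present="$(kubectl get svc -n "$ns" -o name)" || return 2
  for svc in "$@"; do
    # -x: whole-line match, -F: fixed string (no regex)
    if ! grep -qxF "service/${svc}" <<<"$present"; then
      echo "$svc"
    fi
  done
}

# Example:
# missing_services fewbox auth mail payment realtime shipping shipping-app shipping-gateway
```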

About Us

Founded by an older guy who has worked as an architect at both large companies and startups (Lenovo, JD, BoostSolutions) and is now building a small and beautiful company.

We’re building the smart kit for customers who want to be smart.